Artificial intelligence and related technologies have begun to shape important parts of the digital economy and affect core areas of our increasingly networked societies. Whether in transportation, manufacturing, or social justice, AI has the potential to deeply impact our lives and transform our futures in ways both visible and hidden. The promise of AI-based technologies is enormous, with benefits ranging from efficiency gains to unprecedented improvements in quality of life. The challenges, however, are equally staggering: AI creates uncertainty about the future of labor and shifts power to new structures outside the control of existing governance and accountability frameworks. More specifically, uneven access to AI-based technologies, and their uneven impact on marginalized populations, run the disturbing risk of amplifying global digital inequalities. These populations include urban and rural poor communities, women, youth, LGBTQ individuals, ethnic and racial groups, and people with disabilities, particularly those at the intersection of these identities.

A complex set of issues exists at the intersection of AI development and the application divide between the Global North and the Global South. Thematic areas include health and wellbeing, education, and humanitarian crisis mitigation, as well as cross-cutting themes such as data and infrastructure, law and governance, and algorithms and design. We are examining these core areas and cross-cutting themes through research, events, and multi-stakeholder dialogues. The following materials draw on these many efforts, which incorporate perspectives from a wide array of experts in this emerging field.

We’ve created this site to showcase the many dimensions of AI and inclusion as part of our broader Ethics and Governance of AI initiative, carried out in conjunction with the MIT Media Lab. It reflects what we’ve learned from our collective work on AI and inclusion over the past year with partners such as the Institute for Technology and Society Rio and collaborators from the Global Network of Centers. It includes a number of resources produced from the Global Symposium on AI and Inclusion, which convened 170 participants from over 40 countries in Rio de Janeiro last November to discuss the most significant opportunities and challenges of AI. The research, findings, and ideas presented throughout the site illuminate key takeaways from our work and will serve as a roadmap for our continued work on AI and inclusion and its intersection with our AI governance efforts.

Within the site you will find foundational materials on AI and inclusion, including descriptions of overarching themes, key research questions, the initial framing of a research roadmap, and some of the most salient opportunities and challenges identified at the intersection of AI, inclusion, and governance.

We're eager to hear and learn from you. Please meet the team behind this collaborative effort, who can also connect you with our global collaborators and friends.

When considering the social impact of AI, conceptualizing inclusion may become even more difficult, because AI systems are powered by pattern recognition and classification, processes that, broadly speaking, often drive social exclusion. As a result, AI has feedback effects on the notion of inclusion itself, which raises questions we should be asking to refine our own understanding of how to conceptualize AI. This reading list is meant to serve as a starting point for exploring how we may think about the very notion of social inclusion and exclusion in the age of AI, as well as the complex ways in which autonomous systems interface with various dimensions of inclusion and the questions that arise at this intersection.

Reading List

In preparing for the Global Symposium on AI and Inclusion, the organizers surveyed participants about the challenges, opportunities, application areas, and dimensions of inclusion they wanted to explore further at the event. The Pre-Event Survey Responses document offers a brief look at the responses, with a particular focus on comparisons between the Global South and the Global North. In total, we captured 150 responses and categorized regions as follows: Global South (Latin America/Caribbean, Asia, Sub-Saharan Africa, and Northern Africa) and Global North (Europe, North America, Oceania). A few major geographic discrepancies emerged in how respondents perceived the greatest challenges and opportunities of AI. Though these responses draw from a limited sample, they demonstrate differing approaches to AI and its societal implications across geographic regions, highlighting the need for greater cross-cultural collaboration and dialogue on the development, deployment, and evaluation of AI.

Pre-Event Survey Responses

The 170 attendees of the Global Symposium on AI & Inclusion stand together in front of the Museum of Tomorrow in Rio de Janeiro.

Andres Lombana, a fellow at the Berkman Klein Center, greets Ezequiel Passeron, executive director of Faro Digital. The two are both participants in Digitally Connected, a collaboration between UNICEF and the Berkman Klein Center to analyze digital and social media trends among young people globally.

ITS Rio Director Ronaldo Lemos speaks with Maja Bogataj Jančič, director of the Intellectual Property Institute, Ljubljana, Slovenia, and Alek Tarkowski, PhD, director of Centrum Cyfrowe, Warsaw, Poland, and co-founder and coordinator of Creative Commons Poland.

Chinmayi Arun, research director at the Center for Communication Governance and assistant professor of law at National Law University (NLU), both in Delhi, moderates a session on advancing equality in the Global South.

A panel responds to the keynote speech delivered by Ansaf Salleb-Aouissi, lecturer in computer science at Columbia University, on the history of AI. She said we can use lessons learned during the early years of AI to promote more inclusive AI today.

Keynote speaker Nishant Shah, Dean of Research at ArtEZ University of the Arts in the Netherlands, speaks on inclusion in the age of AI. Shah called for considering human and technology factors together when trying to make AI more inclusive.

Nagla Rizk, founding director of Access to Knowledge for Development (A2K4D) in Cairo, emphasized that making AI more inclusive requires new datasets developed by citizens of emerging economies, and reducing reliance on datasets released by government agencies.

Alex Gakuru, executive director of the Content Development and IP (CODE-IP) Trust in Kenya, passes the microphone.

Ciira Maina, Senior Lecturer at Dedan Kimathi University of Technology in Nyeri, Kenya, said, among other things, that we need to disseminate educational materials and other resources that promote AI awareness, particularly in the Global South.

Participants mingle during a reception and poster session featuring some of the participants’ projects, as well as an exhibition by Harshit Agrawal, a research assistant and artist at the MIT Media Lab.

Carlos Affonso, director of ITS Rio, thanks the panelists for their participation in a session on data and economic inclusion and introduces the subsequent breakout sessions.

Participants walk from the main auditorium to a breakout session. Each participant chose two of the ten breakout session themes based on their interests and the nature of their work.

The breakout session focused on data and infrastructure in the age of AI convenes in the Museum of Tomorrow’s Exhibition Lab and makerspace on the symposium’s second day.

The breakout session on business models in the age of AI, moderated by Susan Aaronson, a professor and senior fellow at George Washington University, convenes in the museum courtyard.

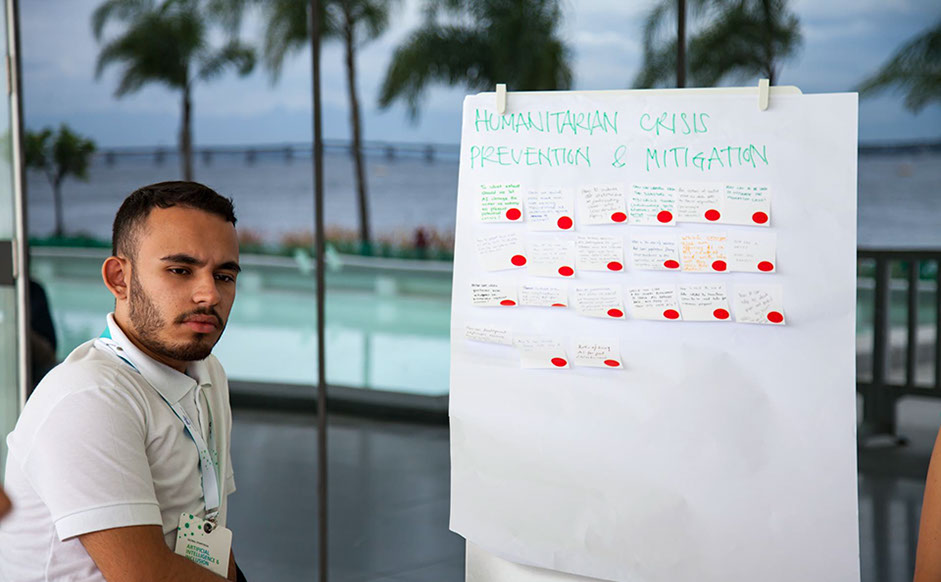

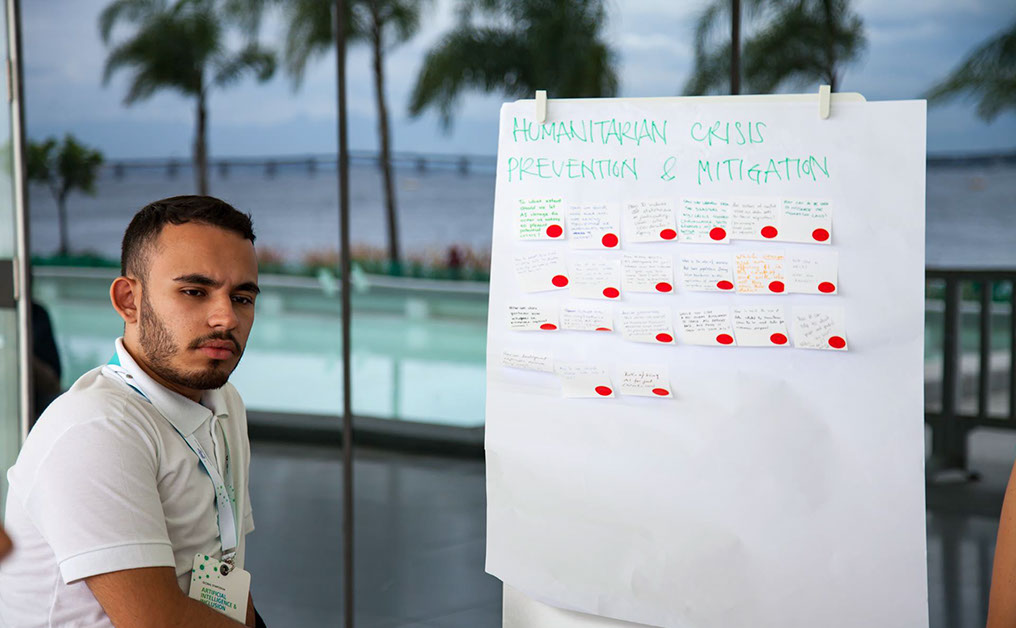

Daniel Calarco de Oliveira, president of International Youth Watch, listens during the breakout session on Humanitarian Crisis Prevention & Mitigation. The session focused on how AI technologies might help prevent humanitarian crises and speed response times.

Kathleen Siminyu, data scientist at Africa's Talking and a Co-Organizer of the Nairobi Chapter of Women in Machine Learning and Data Science, walks past a Museum of Tomorrow sign.

João Duarte, data science manager at Somos Educação, Brazil; Kọ́lá Túbọ̀sún, Founder of the Yorùbá Names Project, Nigeria; Debora Albu of ITS Rio; and Pranesh Prakash, Policy Director of CIS Bangalore, India, walk from the auditorium to a cluster group session.

Agustina del Campo, director of the Center for Studies on Freedom of Expression and Access to Information (CELE), Universidad de Palermo, Argentina, engages with other members of a cluster group meeting.

A cluster group meets on the second day of the symposium in the Museum of Tomorrow mezzanine to identify major challenges and opportunities of AI.

Luis Camacho Caballero, research projects manager at Pontifical Catholic University of Peru, and Michael Best of United Nations University-Computing and Society work with their cluster group to identify major global opportunities presented by AI.

Julia Cavalcanti (ITS Rio), Leyla Keser, Founder and Director of the Istanbul Bilgi University IT Law Institute, and Stephane Hilda Barbosa, Researcher at the Getulio Vargas Foundation Law School in São Paulo (FGV/SP), converse during a cluster group session.

Lucas Santana, head of marketing at Evelle Consultoria & Desabafo Social, Salvador, Brazil, and Monique Evelle, CEO of Evelle Consultoria, walk together during a break on the second day of the symposium.

Mimi Onuoha, artist and researcher at the Eyebeam Center, a nonprofit in Brooklyn, NY, presents her talk about the need for art to interpret AI, and for members of diverse professions to have access to AI.

ITS Rio Director Ronaldo Lemos speaks at the public event on possible future trajectories of AI to create a more just and inclusive society.

Sandra Cortesi, director of Youth and Media at the Berkman Klein Center, discusses the impact of AI-based technologies on children and young people. Cortesi emphasized throughout her talk how technologies have radically transformed the ways in which youth perceive and interact with the world around them.

Two participants walk in the public section of the Museum of Tomorrow after the closing remarks.


The Global Symposium, co-hosted on behalf of the Network of Centers by ITS Rio and the Berkman Klein Center for Internet & Society, involved over 170 participants from more than 40 countries around the world and took place over the course of three days (November 8-10, 2017) at the Museum of Tomorrow in Rio de Janeiro, Brazil. The Symposium was organized with the support of the Ethics and Governance of Artificial Intelligence Fund, International Development Research Centre (IDRC), and the Open Society Foundations, in collaboration with the Museum of Tomorrow.

For more information on the logistics and themes of the Global Symposium on AI & Inclusion, please see the links to the right.

Grounding conversations about AI and inclusion in a shared, contextualized understanding of the fundamental concepts at play is crucial: that shared understanding of AI’s societal interplay is what allows us to begin conceptualizing the best approaches to mitigating AI’s potential risks and maximizing its benefits. The following three overarching themes and critical issues emerged both from the research leading up to the Global Symposium on AI and Inclusion and from the conversations that took place there.

The video on the right features Ansaf Salleb-Aouissi (Columbia University), who frames the history of AI and how we should view inclusion from a technical perspective, and Nishant Shah (ArtEZ University of the Arts), who discusses inclusion in the age of AI and calls for breaking down the dichotomy between the human element and the technological element.

Exploring the intersection of “AI and Inclusion”

It is necessary to critically engage with the ways in which we define the fundamental concepts of both AI and inclusion. As Nishant Shah argues, we must reckon with the history and impact of AI-based technologies and of conceptions of inclusion, and draw attention to their mutually constitutive intersection in order to look beyond computation alone to the actual lived realities of the computed. At the same time, we must grapple with the definition of inclusion in a technological context. From the perspective of the technical development process, Ansaf Salleb-Aouissi articulates the need for a “4D Framework” (develop, de-identify, decipher, and de-bias) when building AI that is both more inclusive in itself and helps advance social inclusion. As we continue to examine all aspects of AI and inclusion, we must also recognize the need for a new vocabulary to discuss the novel ways in which AI and inclusion interact.

Reframing “Inclusion” to embrace self-determination

It is important to understand the influence of Western values within the AI discourse and how this influence may contribute to bias in global systems, both technological and social. One important criticism of the traditional concept of inclusion is that it places the burden of “performing” inclusion on the underrepresented communities themselves. Rather than simply incorporating these communities into global conversations about AI, or “throwing money” at engineers within these communities to support inclusive development, policymakers must enable these communities to actively drive the discussions and deployments that shape their experiences. Self-determination constitutes an alternative to, or an amendment of, the inclusion paradigm, offering agency and space for underrepresented individuals to represent themselves and guide the systems and policies that affect them.

Rewriting old AI narratives to encompass new AI capabilities

We must also reconcile the difference between the speed at which the AI discourse progresses and the speed at which new AI capabilities are developed. Because technological advances often outpace the discourse surrounding them, this gap has tremendous potential to exacerbate existing divides and create new ones. It also makes it urgent to collaborate quickly and meaningfully across regions and sectors in order to understand and mitigate potential risks at the intersection of AI and inclusion.

Through our research and convening efforts, we have identified a series of open research questions that serve as a starting point for a forward-looking research roadmap. These questions are closely linked to the stages of technical development of AI systems. For that reason, we have clustered them into a matrix organized horizontally by the stages of developing an AI system and vertically by mechanisms for intervention. The structure of the matrix and a brief description of each category are outlined below for reference, along with key questions for each cell in the matrix. For more detailed information on the methodology for categorizing research questions, greater background on where they are drawn from, and the full research matrix, please refer to our full research memo.

Can we study past AI evolutionary cycles to predict AI’s future trajectory?

To what extent should we be looking for technical solutions for social problems?

To what extent does the development of new categories as a way to forward inclusion further entrench repressive notions? How does AI deal with this?

How can we incorporate an international human rights framework into AI development?

How do different communities define “Artificial Intelligence,” and how do these definitions influence varying perceptions, including fear of and excitement about the future of AI?

Explaining AI behavior and decision-making is not a trivial task. How do we trust AI without always fully understanding its functionality, and what are the implications of that "blind" trust?

How can we align incentives between companies and activists to make profitable and beneficial AI?

What are ways to empower software experts from the Global South, for example through funding?

How can youth be stakeholders and more involved in building the AI world?

How do we include more individuals in AI systems without erasing difference through normalization and universalization?

Can businesses reconcile their aim of collecting data with protecting users’ data?

Is it possible for us to de-situate AI from the undeniable structure of societies based on surveillance capitalism? Can AI applications and uses be given a public-good purpose? Should they be made public and, to that extent, controlled?

How can we ensure that we work together in the age of AI to define and defend the public interest? How can we collaborate meaningfully?

What does the current global framework of AI production/use look like? Who are the major players, what are their initiatives, and how can we lift up more grassroots initiatives?

How can we improve education systems, especially in engineering, to promote more inclusive and ethical AI? Should improved engineering education move away from logic and back toward mathematics, and would such a curriculum require new forms of engineering?

Can we work with makerspaces to include AI in their offerings? These spaces could host online courses for groups (for example, marginalized youth) and serve as hubs for cutting-edge digital education and creativity.

How do we democratize access to education, particularly technology-focused education, in lower-income countries where little or no English is spoken?

How do educational tools for expanding knowledge and use of AI currently reach populations globally, and how can expanding access to educational resources be reflected positively in AI systems?

How does privilege manifest itself in the means of education chosen by different groups/stakeholders? What is the prevailing narrative about AI and technology in the classroom, and who shapes it?

Is there a need for a (legal) definition of AI? Should it be set at a local, national, or universal level? Should it be binding? Which stakeholders decide on the definition?

How can we harmonize data protection regulations and the right to explanation with complex AI models, such as deep neural networks, that are nonlinear and not self-explanatory? How can the right to data removal be articulated in the AI context without causing underfitting and misrepresentation of class?

How can we force non-democratic countries to abide by the governance systems of AI and what are the implications of a lack of global guidelines/standards?

How do we include AI ethics into the global policy frameworks we build?

What will labor policies look like when most of the population works in informal conditions brought on by the AI age? How can these policies best protect workers?

As AI becomes able to predict diseases, how do we mitigate privacy and bioethics violations that discriminate against individuals based on existing conditions or even their predisposition to health conditions?

AI is a hard design material due to its dynamic nature. How can we design something that is constantly changing? How do we explore “what-if” scenarios of the unknown?

Who is the “reasonable man” we should configure AI systems around? Does such a person exist? If so, what does this person look and act like?

What are ways that we can use algorithms, particularly in search engines, to disseminate legitimate, helpful health information that promotes positive health behavior?

In what respect can businesses from the Global South provide AI applications that businesses from the Global North cannot?

When and how does AI lead to price discrimination or “personalized pricing?”

What are the kinds of human-auditable control mechanisms that can be built into decision making algorithms?

Can we develop technical models (test cases or databases) to ensure that the AI & Inclusion discourse progresses?

Defining the Development Stages of an AI System (Horizontal):

- Design: This stage includes both the technical design of AI-based technologies (e.g. how data for the model will be collected, the target audience of an AI tool) and the design of systems that govern AI, as well as the implications that must be considered before moving on to the development process.

- Development: The development stage is the production phase of an autonomous system that follows the design process. Questions in the development category pertain to the building of more inclusive tools/methods into AI-based technologies, frameworks of AI development, and what AI tools are being developed for whom.

- Deployment: The deployment stage covers the distribution, use, ubiquity, and implementation of AI-based technologies within society at multiple levels, including local, national, and global ecosystems.

- Evaluation/Impact: The evaluation/impact phase encompasses the measurement and understanding of the impact of AI technologies, including ways to evaluate the effects of autonomous systems on different agents within society.

Defining the Mechanisms for Intervention (Vertical):

- Defining and Framing (“Back to First Principles”): This encompasses questions examining the fundamental ways in which concepts related to AI and inclusion are defined.

- Bridge-Building: This mechanism contains questions related to building infrastructures, such as networks, and to liaising between multiple stakeholders.

- Educating: These questions address both education about AI-based technologies and the impacts that AI may have on education.

- Tool-Building: This mechanism contains questions pertaining to the building and usage of tools that use AI technologies.

- Policy-Making: These questions pertain to the sets of principles, guidelines, laws, or regulatory frameworks that govern AI.

When it comes to prioritizing research questions as well as proposing solutions derived from them, our work on AI and inclusion suggests that it is vital to distill the greatest opportunities and challenges decision-makers face while grappling with AI-based technologies, particularly in Global South contexts. Although a number of action items and constructive approaches are warranted, three primary considerations emerged as integral to addressing the opportunities and challenges at the intersection of AI and inclusion found in the research questions. Please use the text box to the right to explore themes in these opportunities and challenges.

Efforts to answer these questions may differ by method, expertise, and region. Therefore, there is a need to devise specific sets of solutions for specific types of problems concerning AI-based technologies and their implications. A broad-strokes approach to formulating solutions for AI and inclusion challenges is ineffective, as the complex nature of problems at this intersection calls for a targeted, multivalent approach. Meanwhile, clustering similar research questions and issues may serve as a way to harmonize discussions surrounding AI and inclusion, aligning normative goals using the levers of individual agency and/or ecosystem-driven approaches.

The temporal aspect of identifying issues and formulating solutions was at the crux of the conversation on seizing the opportunities presented by AI while identifying effective solutions to potential problems. Specifically, AI’s rapid growth and multifaceted societal implications warrant a reconceptualization of the time structure of the AI research agenda. We cannot simply “act now,” nor can we afford to “wait for the time it takes to conduct research;” rather, we must do both simultaneously. This approach, of course, must account for different temporalities and goals for different sets of problems and solutions, modeling itself on larger social movements that successfully build momentum and involvement at global and local levels without following the traditional chronological agenda common in academia.

In terms of collaboration, all questions should ideally be explored with a diverse group of individuals from varying sectors and geographic regions in order to break down constrictive silos. However, certain questions in particular necessitate a co-research approach by nature; these types of questions include, among others: how to expand funding for Global South initiatives; the ways in which behavioral expectations vary geographically; the democratization of AI education; and the global governance of AI systems. Working towards true and organic interdisciplinarity encourages more meaningful integration of varying perspectives that may yield more inclusive AI development and a less hierarchical global AI power structure.

As we consider future opportunities and challenges related to AI & inclusion, it is vital to continue incorporating global perspectives so that we may progress toward a truly inclusive future. To this end, we have interviewed a number of key global players working on these issues, asking each for their input in 120 seconds. Interviewees include, among others, Carlos Affonso, Director of ITS Rio, and Nagla Rizk, Founding Director of Access to Knowledge for Development (A2K4D). The full set of videos and additional resources are listed below.

Learn more:

- Global Network of Internet and Society Research Centers

- AI-themed Berkman Buzz

- Outputs from related BKC events:

- The following are resources (in Portuguese) suggested by ITS Rio to further explore the topic of AI & Inclusion.

This project is supported by the Ethics and Governance of Artificial Intelligence Initiative at the Berkman Klein Center for Internet & Society. In conjunction with the MIT Media Lab, the Initiative is developing activities, research, and tools to ensure that fast-advancing AI serves the public good. Learn more at https://cyber.harvard.edu/research/ai.

The team at the Berkman Klein Center working on issues related to AI & Inclusion includes:

Urs Gasser

Executive Director

Amar Ashar

Assistant Director of Research

Ryan Budish

Assistant Director of Research

Sandra Cortesi

Director of Youth & Media

Elena Goldstein

Project Coordinator

Levin Kim

Project Coordinator

Jenna Sherman

Project Coordinator

Key collaborators include the Institute for Technology and Society Rio, which is currently the main coordinator of the Global Network of Centers and was the host of the November 2017 Global Symposium on AI & Inclusion. The team at ITS Rio working on AI & inclusion includes, among many others, the following:

Carlos Affonso Souza

Co-Founder and Director

Fabro Steibel

Executive Director

Ronaldo Lemos

Co-Founder and Director

Celina Bottino

Project Director

Beatriz Laus Nunes

Project Assistant and Researcher

Eduardo Magrani

Coordinator of Law and Technology

For more information about the network of experts, practitioners, policymakers, and technologists who have contributed to the dialogue on AI & inclusion, as well as the donors that have supported it, please see the Global Symposium on AI & Inclusion website.